📝 Stateless BGP Anycast Architecture for AI Inference

Overview

This document outlines a scalable, secure, and highly available architecture for deploying stateless AI inference workloads in a data center environment using BGP Anycast and ECMP-based load balancing.

Use Case Background

This use case is hypothetical; it demonstrates how stateless inference workloads can be deployed effectively using BGP Anycast in a modern data center.

The example scenario involves a medical AI provider delivering real-time diagnostics from AI-based image analysis, showcasing the use of stateless inference and intelligent routing within a high-performance, redundant infrastructure.

Core Requirements

- Security: No session state or persistent storage of user data

- Redundancy: Full path and node-level failover

- High Availability: Distributed load handling through ECMP

- Performance: Optimized for low-latency, high-bandwidth inference

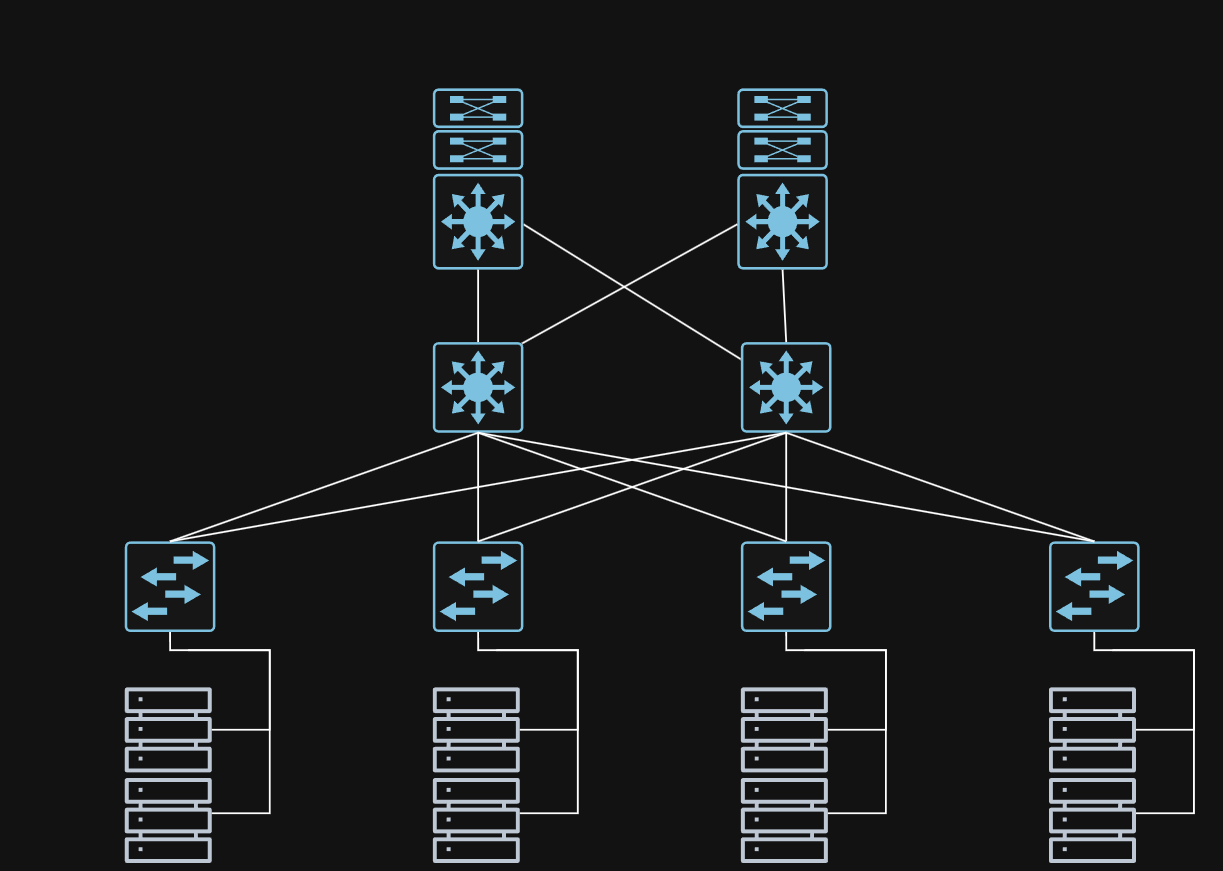

Topology Summary

- Leaf-Spine architecture

- Dual-connected servers per rack using MLAG

- Each server connects to two ToRs (Leafs)

- Each Spine connects to every ToR and to both Core routers

- Core routers provide external connectivity over links of 200 Gbps or more

Data Flow

- Clients access the inference service through a public-facing application via traditional unicast routing.

- Traffic enters the data center through dual Core routers, which perform ECMP to distribute flows across Spine switches.

- Spine switches forward traffic to the appropriate ToRs based on ECMP forwarding tables.

- ToRs distribute traffic to locally connected inference servers using ECMP.

- Each server maintains a BGP session with each of its two ToRs and advertises the Anycast VIP (e.g., 10.10.10.10/32).

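- The server processes the request statelessly and returns the result to the client. Return traffic also crosses the ECMP fabric, but each device hashes it independently, so the response may take a different path than the request. This asymmetry is acceptable as long as no device on the path depends on seeing both directions of a flow (e.g., a stateful firewall).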

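Because every stage uses ECMP, flows are spread across all Spines, ToRs, and servers, and each layer has alternate paths if a device fails. This is what makes delivery of stateless inference responses fast, balanced, and resilient.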
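The multi-stage selection described above can be modeled as a walk in which each device hashes the flow independently. This is a minimal sketch under stated assumptions: the topology (two Cores, four Spines, two ToR pairs, four servers), the device names, and the use of SHA-256 seeded with the device name are all hypothetical. Real switches use hardware hash functions and commonly vary a per-device seed to avoid hash polarization, where consecutive stages make correlated choices and leave some links unused.

```python
import hashlib

# Hypothetical fabric: each device lists its equal-cost next hops toward the VIP.
FABRIC = {
    "core1": ["spine1", "spine2", "spine3", "spine4"],
    "core2": ["spine1", "spine2", "spine3", "spine4"],
    "spine1": ["tor1a", "tor1b", "tor2a", "tor2b"],
    "spine2": ["tor1a", "tor1b", "tor2a", "tor2b"],
    "spine3": ["tor1a", "tor1b", "tor2a", "tor2b"],
    "spine4": ["tor1a", "tor1b", "tor2a", "tor2b"],
    "tor1a": ["srv1", "srv2"],
    "tor1b": ["srv1", "srv2"],
    "tor2a": ["srv3", "srv4"],
    "tor2b": ["srv3", "srv4"],
}


def hop_hash(device: str, flow: tuple) -> int:
    """Hash the flow's 5-tuple with a per-device seed (here, the device name)."""
    key = (device + "|" + "|".join(map(str, flow))).encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:4], "big")


def forward(ingress: str, flow: tuple) -> list[str]:
    """Follow ECMP choices from an ingress Core router until a server is reached."""
    path = [ingress]
    node = ingress
    while node in FABRIC:  # servers have no entry, so the walk stops there
        next_hops = FABRIC[node]
        node = next_hops[hop_hash(node, flow) % len(next_hops)]
        path.append(node)
    return path


# (src IP, dst IP, protocol, src port, dst port); values are illustrative.
flow = ("198.51.100.7", "10.10.10.10", 6, 51514, 443)
print(forward("core1", flow))
```

Every packet of this flow follows the same Core, Spine, ToR, and server; a new connection with a different source port will usually hash onto a different path.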

Routing Behavior

- Each server advertises a VIP (e.g., 10.10.10.10/32) to its dual-connected ToRs via BGP, enabling dynamic routing control and simplified failover.

- ToRs receive the same VIP /32 from every local server and advertise it to the Spines using eBGP.

- ToRs and Spines have BGP multipath enabled, so each can install several equal-cost paths to the VIP and forward across them with ECMP.

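- Flow-based ECMP hashes header fields (typically the 5-tuple: source and destination IP, protocol, source and destination port), so all packets of a flow take the same path and arrive in order.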
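Flow-based ECMP can be illustrated by hashing the 5-tuple and taking the result modulo the number of next hops. In this sketch SHA-256 stands in for a switch's hardware hash, and the Spine names and addresses are hypothetical.

```python
import hashlib
from typing import NamedTuple, Sequence


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int
    dst_port: int


def flow_hash(ft: FiveTuple) -> int:
    """Deterministic 32-bit hash of the 5-tuple (stand-in for a hardware hash)."""
    key = "|".join(map(str, ft)).encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:4], "big")


def select_next_hop(ft: FiveTuple, next_hops: Sequence[str]) -> str:
    """Packets with the same 5-tuple always map to the same next hop."""
    if not next_hops:
        raise ValueError("no route to VIP")
    return next_hops[flow_hash(ft) % len(next_hops)]


next_hops = ["spine1", "spine2", "spine3", "spine4"]
request = FiveTuple("198.51.100.7", "10.10.10.10", 6, 51514, 443)
print(select_next_hop(request, next_hops))
```

Because the choice depends only on header fields, no per-flow table is needed on the switch; the trade-off is that balance depends on having many distinct flows.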

High Availability & Redundancy

- Dual ToR connections per server, bonded with MLAG, ensure local failover if one ToR or link fails.

- BFD (Bidirectional Forwarding Detection) and UDLD (Unidirectional Link Detection) accelerate failure detection.

- Spine and Core layers are fully meshed to support upstream and downstream path diversity.

- ECMP provides load sharing at each hop; when a next hop fails, it is removed from the ECMP group and traffic continues over the remaining paths.
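BFD's contribution to failover speed can be quantified with the asynchronous-mode detection time defined in RFC 5880: the remote Detect Mult multiplied by the agreed interval. The timer values below (300 ms, multiplier 3) and the 9 s BGP hold timer used for comparison are assumptions for illustration, not recommendations.

```python
def bfd_detection_time_ms(local_required_min_rx_ms: int,
                          remote_desired_min_tx_ms: int,
                          remote_detect_mult: int) -> int:
    """Asynchronous-mode BFD detection time (RFC 5880).

    The agreed interval is the slower of what the local side can receive and
    what the remote side wants to send. The session is declared down if no
    control packet arrives within Detect Mult agreed intervals.
    """
    agreed_interval_ms = max(local_required_min_rx_ms, remote_desired_min_tx_ms)
    return remote_detect_mult * agreed_interval_ms


# Hypothetical timers: 300 ms in both directions, multiplier 3.
bfd_ms = bfd_detection_time_ms(300, 300, 3)
# Hypothetical BGP hold timer, for comparison with BGP-only failure detection.
bgp_hold_ms = 9_000
print(f"BFD: {bfd_ms} ms, BGP hold timer alone: up to {bgp_hold_ms} ms")
```

With these values BFD declares the neighbor down in 900 ms, so BGP can withdraw the failed path well before its own hold timer would expire.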

Security Model

- The inference service is stateless — each request is processed in isolation with no retained context.

- NVIDIA BlueField-3 DPUs (SmartNICs) provide hardware-accelerated encryption and offload networking and security processing from the host CPU.

- The network design eliminates the need for session-based state retention, reducing exposure.

Observability (Optional Enhancement)

- ECMP itself provides no visibility into how traffic is distributed across its next hops.

- Tools such as sFlow or IPFIX can be deployed at ToRs or Spines to:

  - Identify flow concentration

  - Detect ECMP imbalance

  - Monitor network-level anomalies

- These tools are optional but recommended for environments that require operational visibility.
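One simple imbalance signal that flow data or interface counters can feed is the ratio of the busiest ECMP member to the average member. The metric, the 1.25 threshold, and the sample counters below are assumptions for illustration; sFlow and IPFIX collectors export much richer per-flow data than this.

```python
from statistics import mean


def ecmp_imbalance(member_bytes: dict[str, int]) -> float:
    """Busiest ECMP member divided by the average member (1.0 means perfectly even)."""
    if not member_bytes:
        raise ValueError("no ECMP members")
    avg = mean(list(member_bytes.values()))
    return max(member_bytes.values()) / avg if avg else 1.0


def hot_members(member_bytes: dict[str, int], threshold: float = 1.25) -> list[str]:
    """Members carrying more than `threshold` times the average load."""
    if not member_bytes:
        return []
    avg = mean(list(member_bytes.values()))
    return sorted(m for m, b in member_bytes.items() if b > threshold * avg)


# Hypothetical byte counts per ECMP member link over one sampling window.
sample = {"spine1": 410_000, "spine2": 395_000, "spine3": 980_000, "spine4": 405_000}
print(f"imbalance ratio: {ecmp_imbalance(sample):.2f}")
print("hot members:", hot_members(sample))
```

A collector could compute this per ECMP group and alert when the ratio stays high across several windows, which points at either a few elephant flows or a hashing problem.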

Benefits

- Stateless operation simplifies compliance and reduces attack surface

- Horizontal scalability: new servers join the pool simply by advertising the VIP

- High availability and failover via ECMP, BFD, and dual-path redundancy

- Policy control via BGP enables future extensibility and automation

Drawbacks & Considerations

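- ECMP balance depends on flow entropy: traffic arriving through NAT gateways or proxies can collapse into a few source addresses or a small number of long-lived connections, so the hash spreads it less evenly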

- Observability requires additional tooling (e.g., flow monitoring collectors)

- ECMP does not consider server load (CPU, memory) — it's purely routing-based
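Both considerations can be seen in a small simulation: ECMP balances flows, not requests or CPU load, so the same request volume spreads very differently depending on how many distinct flows carry it. The server names, addresses, and request counts are hypothetical, and SHA-256 stands in for the switch hash.

```python
import hashlib
from collections import Counter

# Hypothetical pool of servers advertising the VIP behind one ToR pair.
SERVERS = ["srv1", "srv2", "srv3", "srv4"]


def pick_server(flow: tuple) -> str:
    """Flow-based ECMP: hash the 5-tuple and choose a server."""
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return SERVERS[int.from_bytes(digest[:4], "big") % len(SERVERS)]


def requests_per_server(flows: list[tuple], requests_per_flow: int) -> Counter:
    """Every request on a flow lands on the same server, whatever that server's load."""
    load = Counter({s: 0 for s in SERVERS})
    for flow in flows:
        load[pick_server(flow)] += requests_per_flow
    return load


# High entropy: 1,200 clients, each sending one request on its own connection.
direct = [(f"203.0.113.{i % 250}", "10.10.10.10", 6, 40000 + i, 443) for i in range(1200)]
# Low entropy: the same 1,200 requests carried by 3 long-lived proxy connections.
proxied = [("192.0.2.10", "10.10.10.10", 6, 50000 + i, 443) for i in range(3)]

print("direct: ", dict(requests_per_server(direct, 1)))
print("proxied:", dict(requests_per_server(proxied, 400)))
```

In the proxied case at least one of the four servers receives nothing, no matter how good the hash is, because there are fewer flows than servers.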

Validation and Test Plan

BGP and VIP Reachability

- Confirm BGP sessions are established between servers and both ToRs.

- Verify VIP (10.10.10.10/32) is advertised from servers to ToRs and propagated to Spines.
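These two checks can be automated against a snapshot of each device's BGP state. The snapshot structure below is a hypothetical, already-normalized form (it is not any vendor's CLI or API output); "Established" is the BGP finite-state-machine state for a working session.

```python
VIP = "10.10.10.10/32"

# Hypothetical snapshot normalized from each device's BGP summary and route table.
snapshot = {
    "tor1a": {"sessions": {"srv1": "Established", "srv2": "Established"},
              "vip_next_hops": ["srv1", "srv2"]},
    "tor1b": {"sessions": {"srv1": "Established", "srv2": "Idle"},
              "vip_next_hops": ["srv1"]},
    "spine1": {"sessions": {"tor1a": "Established", "tor1b": "Established"},
               "vip_next_hops": ["tor1a", "tor1b"]},
    "spine2": {"sessions": {"tor1a": "Established", "tor1b": "Established"},
               "vip_next_hops": []},
}


def check_reachability(snapshot: dict, vip: str = VIP) -> list[str]:
    """Report sessions not in the Established state and devices without a VIP route."""
    problems = []
    for device, state in sorted(snapshot.items()):
        for peer, fsm_state in sorted(state["sessions"].items()):
            if fsm_state != "Established":
                problems.append(f"{device}: BGP session to {peer} is {fsm_state}")
        if not state["vip_next_hops"]:
            problems.append(f"{device}: no route to {vip}")
    return problems


for problem in check_reachability(snapshot):
    print(problem)
# spine2: no route to 10.10.10.10/32
# tor1b: BGP session to srv2 is Idle
```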

ECMP Load Balancing

- Ensure multiple next-hops exist for the VIP across ToRs and Spines.

- Validate that flow-based ECMP distributes traffic evenly under normal load.
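"Evenly" can be made concrete with a chi-square goodness-of-fit test on the number of flows per next hop during a test run with many distinct flows. This sketch assumes four next hops, a 5% significance level, and hypothetical flow counts (e.g., gathered from sFlow samples). It counts flows rather than bytes because bytes per flow vary widely; with very large samples the test flags even tiny deviations, so a practical tolerance may be preferable there.

```python
def chi_square(observed: list[int]) -> float:
    """Pearson chi-square statistic against an even split across ECMP next hops."""
    total = sum(observed)
    if total == 0:
        raise ValueError("no flows observed")
    expected = total / len(observed)
    return sum((o - expected) ** 2 / expected for o in observed)


# Critical value of the chi-square distribution with 3 degrees of freedom
# (four next hops) at the 5% significance level.
CRITICAL_DF3_P05 = 7.815


def evenly_distributed(flows_per_next_hop: list[int],
                       critical: float = CRITICAL_DF3_P05) -> bool:
    """True if the observed split is consistent with an even ECMP distribution.

    The default critical value is only valid for exactly four next hops.
    """
    return chi_square(flows_per_next_hop) <= critical


print(evenly_distributed([250, 262, 241, 247]))  # True  (statistic 0.936)
print(evenly_distributed([400, 200, 200, 200]))  # False (statistic 120.0)
```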

Redundancy and Failover

- Simulate server or ToR failure and confirm BGP withdrawal and traffic rebalancing.

- (Optional) Verify BFD reduces failover detection time to sub-second intervals.
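When a server fails and its route is withdrawn, the ToR's ECMP group shrinks. With plain modulo hashing this can also move flows that were on healthy servers, breaking their in-progress TCP connections even though the application itself is stateless. Some switch platforms offer resilient hashing, which keeps a fixed bucket table and reassigns only the failed member's buckets. The sketch below contrasts the two as a model of what to measure during the failover test; the bucket count, server names, and round-robin reassignment are assumptions, not a specific vendor's algorithm.

```python
import hashlib

SERVERS = ["srv1", "srv2", "srv3", "srv4"]  # hypothetical ECMP members on one ToR
N_BUCKETS = 64                               # hypothetical resilient-hash table size


def flow_hash(flow_id: int) -> int:
    """Stand-in for the switch's hardware hash of a flow's 5-tuple."""
    return int.from_bytes(hashlib.sha256(str(flow_id).encode()).digest()[:4], "big")


def modulo_pick(flow_id: int, members: list[str]) -> str:
    """Plain ECMP: hash modulo the current number of members."""
    return members[flow_hash(flow_id) % len(members)]


def build_buckets(members: list[str]) -> list[str]:
    """Resilient ECMP: a fixed-size bucket table, filled round-robin."""
    return [members[i % len(members)] for i in range(N_BUCKETS)]


def withdraw(buckets: list[str], failed: str) -> list[str]:
    """Reassign only the failed member's buckets, round-robin over the survivors."""
    survivors = sorted(set(buckets) - {failed})
    out, j = [], 0
    for owner in buckets:
        if owner == failed:
            out.append(survivors[j % len(survivors)])
            j += 1
        else:
            out.append(owner)
    return out


def bucket_pick(flow_id: int, buckets: list[str]) -> str:
    return buckets[flow_hash(flow_id) % len(buckets)]


flows = range(10_000)
failed = "srv3"
survivors = [s for s in SERVERS if s != failed]
old_buckets = build_buckets(SERVERS)
new_buckets = withdraw(old_buckets, failed)

# Count flows that were on a healthy server but were remapped anyway.
moved_modulo = sum(
    1 for f in flows
    if modulo_pick(f, SERVERS) != failed
    and modulo_pick(f, SERVERS) != modulo_pick(f, survivors)
)
moved_resilient = sum(
    1 for f in flows
    if bucket_pick(f, old_buckets) != failed
    and bucket_pick(f, old_buckets) != bucket_pick(f, new_buckets)
)
print(f"healthy flows remapped: modulo={moved_modulo}, resilient={moved_resilient}")
```

Only the failed server's flows should be disrupted in an ideal failover; comparing connection resets against this model shows which behavior the ToRs actually implement.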

Return Path Consistency

- Confirm server responses are routed correctly through the ECMP fabric without loss or reordering.

Basic Security Validation

- Verify that ToRs accept BGP sessions only from authorized speakers and accept only the expected VIP prefix from servers, so a misconfigured host cannot inject other routes.
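The route-integrity half of this check amounts to an exact-match inbound filter: anything a server advertises other than the VIP is rejected. On real ToRs this would be a prefix list or route map, typically combined with session authentication such as TCP MD5 (RFC 2385) or TCP-AO (RFC 5925); the Python below only models the filter's decision, and the allowed set is a hypothetical example.

```python
import ipaddress

# Hypothetical policy: servers may advertise only the Anycast VIP, as an exact /32.
ALLOWED_FROM_SERVERS = {ipaddress.ip_network("10.10.10.10/32")}


def accept_from_server(prefix: str) -> bool:
    """Exact-match inbound filter for server-originated routes on a ToR."""
    try:
        net = ipaddress.ip_network(prefix)
    except ValueError:  # malformed prefix or host bits set
        return False
    return net in ALLOWED_FROM_SERVERS


for candidate in ["10.10.10.10/32", "10.10.10.0/24", "0.0.0.0/0"]:
    print(candidate, accept_from_server(candidate))
```

A test run would advertise each disallowed prefix from a server and confirm it never appears in the ToR or Spine routing tables.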

Future Improvements

- Integrate health checks into route advertisement (e.g., have FRR withdraw the VIP when the model or its container is unhealthy)

- Use IPFIX/sFlow with collectors for ECMP validation and flow tracking

- Evaluate StackWise Virtual as an alternative to MLAG for the per-rack ToR pair, to strengthen ToR-level fault tolerance
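Tying advertisement to service health could look like the following sketch. It polls a local health endpoint and adds or removes the VIP's `network` statement in FRR through `vtysh`, with hysteresis so a single failed probe does not cause route flapping. The ASN (65101), the health URL, and the rise/fall counts are hypothetical, and the sketch assumes the VIP is configured on the server's loopback so FRR has a matching route to originate.

```python
import subprocess
import time
import urllib.request

VIP = "10.10.10.10/32"
LOCAL_ASN = 65101                             # hypothetical private ASN for this server
HEALTH_URL = "http://127.0.0.1:8000/healthz"  # hypothetical inference health endpoint


def vtysh_args(asn: int, prefix: str, announce: bool) -> list[str]:
    """Build a vtysh invocation that adds or removes the VIP's BGP network statement."""
    statement = f"network {prefix}" if announce else f"no network {prefix}"
    return ["vtysh",
            "-c", "configure terminal",
            "-c", f"router bgp {asn}",
            "-c", "address-family ipv4 unicast",
            "-c", statement]


def is_healthy(url: str = HEALTH_URL, timeout: float = 1.0) -> bool:
    """One probe: healthy only if the endpoint answers HTTP 200 within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # connection refused, timeout, or HTTP error status
        return False


class Debouncer:
    """Hysteresis: require `rise` good probes to announce and `fall` bad ones to withdraw."""

    def __init__(self, rise: int = 3, fall: int = 2) -> None:
        self.rise, self.fall = rise, fall
        self.state = False  # start withdrawn until the service proves healthy
        self._streak = 0

    def update(self, ok: bool) -> bool:
        if ok == self.state:
            self._streak = 0
        else:
            self._streak += 1
            if self._streak >= (self.rise if ok else self.fall):
                self.state, self._streak = ok, 0
        return self.state


def run(interval: float = 1.0) -> None:
    """Poll forever, changing the BGP advertisement only when the debounced state flips."""
    debouncer = Debouncer()
    announced = False  # assumes the VIP is not advertised when the checker starts
    while True:
        healthy = debouncer.update(is_healthy())
        if healthy != announced:
            subprocess.run(vtysh_args(LOCAL_ASN, VIP, healthy), check=True)
            announced = healthy
        time.sleep(interval)
```

A supervisor such as a systemd unit would call `run()`. Because the checker starts in the withdrawn state, a freshly booted server only attracts traffic after three consecutive healthy probes.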

This document serves as a formal design reference for stateless BGP Anycast inference architecture. It may be used as a deployment blueprint, technical proposal, or educational artifact, and can be adapted for publication depending on audience or platform.